The Rise of AI Impersonation: A New Era of Identity Fraud

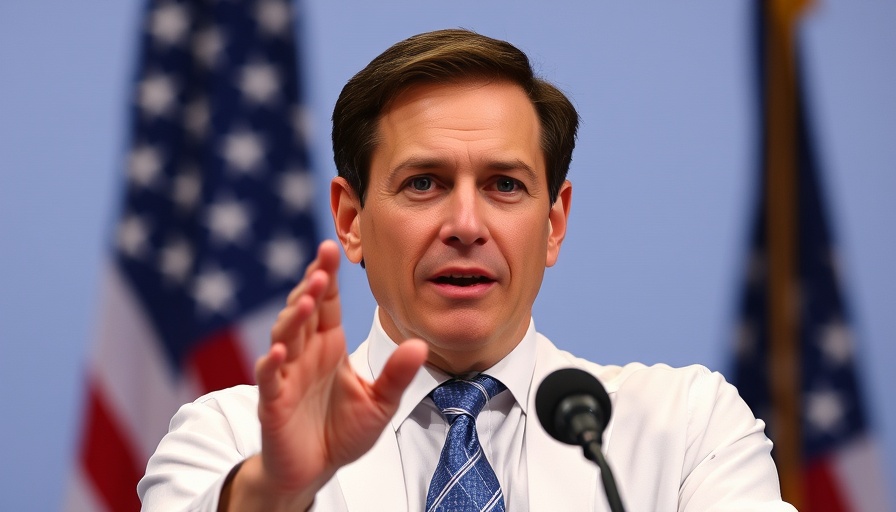

In a startling incident reported by major news outlets, an unidentified fraudster used artificial intelligence to impersonate U.S. Secretary of State Marco Rubio. The perpetrator sent fake voice messages and text messages that mimicked Rubio's voice and writing style. These messages reached at least five high-ranking officials, including foreign ministers and a U.S. governor, and appear to have been aimed at extracting sensitive information from them.

This incident highlights growing global concern over AI impersonation, which government agencies have reported with increasing frequency. From deceptive emails to fabricated phone calls, cybercriminals are using AI to bypass traditional security measures that rely on recognizing a familiar voice or writing style. With Rubio's impersonator reportedly active since mid-June, the episode underscores the need for greater awareness and stronger security protocols around AI technologies.

Why It Matters: The Impact of AI on Democracy

As political figures are targeted through AI impersonation, the implications reach beyond privacy breaches; they challenge the foundations of democracy itself. David Axelrod, a former senior adviser to Barack Obama, emphasized the urgency of addressing these threats. The fact that anyone, including malicious actors, can replicate influential voices raises serious concerns about misinformation and manipulation in political discourse. At a time when trust in information is already fragile, these developments could further undermine public confidence in democratic processes.

Learning from the Moment: Basics of AI and Cybersecurity

This incident is a critical lesson for anyone unfamiliar with the basics of artificial intelligence. For tech enthusiasts and professionals alike, understanding how AI works is valuable both for personal knowledge and for strengthening organizational security. Concepts such as machine learning and deep learning explain how these scams are carried out: voice-cloning tools, for example, rely on deep learning models trained on recordings of a target's speech. Better AI literacy helps individuals and organizations recognize potential scams and reduce their risk.

Strategies for Protecting Against AI Scam Threats

To counter the rising threat of AI impersonation, organizations must raise cybersecurity awareness among their staff. A few essential strategies include:

- Awareness Training: Provide regular training sessions highlighting the dangers of AI impersonation and phishing scams.

- Multi-Factor Authentication: Strengthen access security by requiring multiple verification steps before anyone can access sensitive government communications.

- Verification Protocols: Establish procedures for verifying communications from known figures, such as calling or emailing the purported sender through a contact channel that was verified in advance, rather than replying through the channel the message arrived on.
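The verification-protocol idea can be sketched in a few lines of code. The example below is a minimal, hypothetical illustration, not a description of any agency's actual procedure: it assumes an organization keeps a directory of contact channels registered in advance through a trusted process, and that any message requesting sensitive information must be confirmed through that directory rather than through contact details supplied in the message itself. The names `TRUSTED_DIRECTORY`, `IncomingMessage`, `verification_channel`, and `may_act_on` are invented for this sketch, and the phone numbers are placeholders.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical directory of contact channels registered in advance through a
# trusted process. Entries are never taken from an incoming message.
TRUSTED_DIRECTORY: Dict[str, str] = {
    "secretary_of_state": "+1-202-555-0100",  # placeholder number
}


@dataclass
class IncomingMessage:
    claimed_sender: str       # identity the message claims to come from
    reply_channel: str        # contact details supplied inside the message
    requests_sensitive: bool  # does it ask for sensitive information or action?


def verification_channel(msg: IncomingMessage) -> Optional[str]:
    """Return the pre-registered channel to use for out-of-band confirmation,
    or None if the claimed sender is not in the trusted directory."""
    # Deliberately ignore msg.reply_channel: an impersonator controls it.
    return TRUSTED_DIRECTORY.get(msg.claimed_sender)


def may_act_on(msg: IncomingMessage, confirmed_out_of_band: bool) -> bool:
    """Decide whether a message may be acted on under this hypothetical policy."""
    if not msg.requests_sensitive:
        return True  # routine messages need no extra step in this sketch
    if verification_channel(msg) is None:
        return False  # unknown sender asking for sensitive data: refuse
    # Known sender: act only after confirming through the trusted channel.
    return confirmed_out_of_band
```

For example, a message claiming to be from the Secretary of State that asks for sensitive details and offers its own callback number would be routed to the directory number instead, and `may_act_on(msg, confirmed_out_of_band=False)` returns `False` until someone confirms the request through that independent channel. The key design choice is that the contact details inside a suspicious message are never trusted, since a convincing voice clone or spoofed text can supply any number it likes.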

By taking a proactive approach, organizations and individuals can better prepare for the evolving risks that AI technology brings.

Looking Ahead: Future of AI and Cybersecurity

Artificial intelligence is evolving rapidly, and so are the cybersecurity challenges that come with it. As AI technologies advance, the potential for their misuse will likely grow. For young innovators and tech enthusiasts, understanding and adapting to these changes is crucial, and we must continue to educate ourselves about the ethics and responsibilities that come with AI development.

In conclusion, the Rubio impersonation raises significant questions about technology's influence on society and its security. As AI takes on a larger role in communication, building robust defenses against impersonation and misinformation is not just prudent but essential.